Mosiki, the Modest wiki. Early tech choices

(In this blog series I attempt to develop a wiki server that tries to be respectful of computer resources, as part of a self-assigned Modest Programming Challenge. The series starts here.)

Alright so I want to develop a wiki— which for now I shall call Mosiki— with speed and lightness. A sensible first question is what am I gonna be writing this in? The tried-and-true high performance language C++? The classic and original C? Or perhaps rewrite all of modern software itself in Rust?

But no, I'm probably just gonna use Python.

Let's just drop our standards way low now

Now, there's a chance someone saw the first post and went "YES! You're right! All these lumberjack programmers need to stop using JS and transition to something actually capable of making good utilization of resources!" and that sort of person would now promptly call me out for being a poser.

I do want to clarify, if you want to truly approach the height of what is really possible with a computer, then you're almost certainly going to want to use a statically compiled language. One where you can reason about how your program is actually going to work in memory, care about cache locality, and maybe break out some SIMD instructions. Certainly if your language has some kind of runtime, uses garbage collection, and a JIT, it's literally doing more than is necessary to actually just run the program, of course it's not going to be as resource efficient as something that isn't doing that stuff.

(An optional tangent about Zig)

I actually quite like the idea of programming a web server in Zig, specifically because of its allocator system. Zig strives to not do any memory allocation without your knowledge. If a standard-library function allocates memory, you have to pass an allocator to it, and this allows you to do some thoughtful optimizations. In particular, I actually really like the idea of having one allocator per request, because then you can trivially track how much memory the request uses and if it passes a threshold, just kill it. A good feature for server reliability.

But no. I'm not gonna use one of those languages. I'm not even gonna use one of the fast JIT languages. I'm gonna try Python. The thing with the damn Global Interpreter Lock that has prevented good multithreading for years. Why would I do this? There are several reasons.

- Speed of development. It's pretty fast to hack something up in Python.

- It helps with one of the project's major goals: be easily modifiable. Python is widely understood and considered unintimidating. If I wrote it in a manual memory management language some would (probably quite rightly) feel concerned that if they modified it they'd introduce some variety of security error. It also has a nice tweaking experience: just open the damn file and edit it and run the program, no needing to set up a complex dev environment.

- It's just a wiki, I mean come on.

I'm serious about that last one. A wiki is a simple CRUD app that takes a markup and renders static pages. It should be largely I/O-bound. Python is computationally slow but what computations is this thing going to even be doing?

I think that while yes a lot of Modesty™ can be found in choosing the right programming language, I think you can also achieve a lot just via careful programming, and mindful solution choices. People don't check their memory usage, people reach for large complex solutions to problems that they just don't have. I want to test the idea that people don't have to change absolutely everything about the way they program to end up with better software. I'm going for modest not optimal.

A thing I think more people should care about though is choice of dependencies. Speaking of which...

You should consider SQLite for your website

For those of you unaware, let me introduce you to SQLite. SQLite is the most deployed SQL DB, estimated at over a trillion instances. It's used in aeroplanes and is tested to that industry's standard. The devs care about its size, speed, backwards compatibility, and reliability. They've pledged compatibility until the year 2050. Python comes with bindings for it in the standard library. And it will be the storage mechanism for Mosiki.

It's considered an unorthodox choice for a webserver DB but that's actually not outside of its goals; the SQLite website itself uses SQLite as its backing, and cites it being a good choice for small-to-medium traffic servers. So it should be perfect for my target use case. It also should lower the spec requirements a lot compared to using something like Postgres.

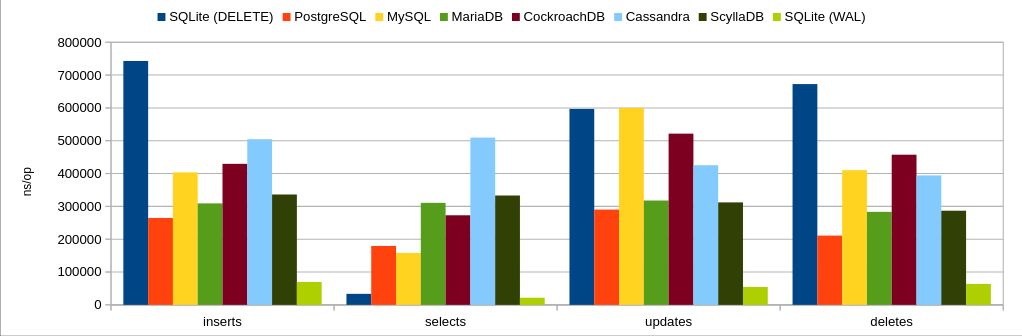

Additionally, SQLite is actually quite fast. DokuWiki just uses files but SQLite will probably be faster than I ever will be at that. And what may come as a shock: SQLite is actually faster than typical DBs, at least for simple cases with low write-concurrency.

(Source: https://sj14.gitlab.io/post/2018/12-22-dbbench/)


By turning on one setting, Write-Ahead Log mode, SQLite outshines popular web server DBs for simple cases. And this really shouldn't be that surprising because it's just doing less work than typical DBs. There's no server, no separate process; it's an API that reads and writes data to a file in an atomic ACID-conforming way. And yes, it won't handle the same sort of concurrent writer load as Postgres, but I don't have that problem, and neither do many websites.
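For the curious, here's roughly what turning that setting on looks like from Python's built-in `sqlite3` module. This is a minimal sketch and not Mosiki's actual code: the `pages` table, the `open_wiki_db` helper, and the file name are all made up for illustration.

```python
import os
import sqlite3
import tempfile


def open_wiki_db(path):
    """Open (or create) a SQLite database file and switch it to WAL mode."""
    conn = sqlite3.connect(path)
    # The pragma returns the journal mode now in effect ("wal" on success).
    # WAL is persistent: once set, the database file stays in WAL mode.
    mode = conn.execute("PRAGMA journal_mode=WAL").fetchone()[0]
    # Hypothetical schema: one row per wiki page, keyed by its slug.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS pages (slug TEXT PRIMARY KEY, body TEXT NOT NULL)"
    )
    conn.commit()
    return conn, mode


# Demo against a throwaway file (WAL needs a real file, not ":memory:").
demo_dir = tempfile.mkdtemp()
conn, mode = open_wiki_db(os.path.join(demo_dir, "mosiki-demo.db"))
with conn:  # commits on success, rolls back on an exception
    conn.execute(
        "INSERT OR REPLACE INTO pages (slug, body) VALUES (?, ?)",
        ("home", "# Welcome"),
    )
body = conn.execute("SELECT body FROM pages WHERE slug = ?", ("home",)).fetchone()[0]
print(mode, body)
conn.close()
```

Note the `?` placeholders: letting SQLite bind the values is what keeps page slugs from turning into SQL injection.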

If there's anything you take away from this post, it should be that you should consider SQLite a real option for web-servers.

Markdown

So I need a markup for Mosiki. I could invent one. But honestly, why? Markdown is pretty good. It's pretty simple. And there are highly tested implementations. markdown-it-py is the one you're meant to use for Python these days. It has some HTML safety features, they've benchmarked it against other solutions, and it is even extensible. There's a footnote extension, and I'll definitely use that. Overall, it seems like a reasonable solution that even allows for some expansion in the future. I am trying to be careful about dependencies. I don't want to accumulate 1000s of dependencies NPM-style. But this seems like a reasonable choice, and worst-case scenario it could always be replaced with an even lighter Markdown parser.

The troubles with security

An area of conflict I wasn't expecting for this project was between Modesty and Security. I decided to look into state of the art password hashing and found that part of it now is deliberately high memory usage. That sounds weird at first but it makes sense on further pondering. Computers these days can do a whole lot of work in parallel, particularly on GPUs. If you want to make sure that your hashing method is safe from large scale brute force attacks you want to make sure it's hard to defeat through all our new parallelized programming power.

And the logical way to do that is to simply use memory. Parallelization has improved much faster than memory sizes. Argon2 is seemingly the state of the art these days. And its primary recommended setting uses 2GB of RAM. Good luck launching a parallelized brute-force attack against that. It does have a low-memory recommendation that uses 64MB but... honestly that still feels a bit much? I'm trying to target 512MB VPSs here, so 64MB is a lot. But I'm not a security expert, and so I probably shouldn't tamper. I can make a lock and limit it to verifying one login at a time which should make it fine for my use case.
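Here's a sketch of the one-login-at-a-time lock idea. Big caveat: Python's standard library doesn't ship Argon2 (you'd need a third-party package for that), so this uses `hashlib.scrypt`, another memory-hard function, purely as a stand-in. The cost parameters and function names are my own assumptions for illustration, not a security recommendation.

```python
import hashlib
import hmac
import os
import threading

# Only one hash computation runs at a time, so the memory spent on hashing
# stays at roughly one hash's worth no matter how many logins arrive at once.
_hash_lock = threading.Lock()

# Assumed scrypt cost parameters: roughly 128 * n * r bytes, about 16 MiB.
SCRYPT_PARAMS = {"n": 2**14, "r": 8, "p": 1}


def hash_password(password, salt=None):
    """Return (salt, digest) for a password using a memory-hard KDF."""
    if salt is None:
        salt = os.urandom(16)
    with _hash_lock:  # other callers wait here instead of allocating in parallel
        digest = hashlib.scrypt(
            password.encode("utf-8"), salt=salt, dklen=32, **SCRYPT_PARAMS
        )
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Re-hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)


salt, digest = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, digest))
```

The trade-off is that logins queue up behind each other, which seems acceptable for a small wiki but would be a bottleneck on a busy site.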

(An optional tangent about an entirely other solution)

So what's the easiest way to get around good security being RAM expensive? Plain text password storage!

Yes that was a joke. Please don't ever do that. No, the alternative that occurred to me is... maybe don't use passwords? Hypothetically you could just use some email-you-a-code solution, and not ask the user to trust you with a password at all. Although that would mean requiring some sort of mail server, which is somewhat of a complicating matter. You could also use some SSO which obviously involves cryptography but shouldn't be as burdensome. But then you have to saddle people with some SSO restriction. Tough trade-off.
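To make the email-you-a-code idea a bit more concrete, here's a rough standard-library sketch of the code handling only. Everything in it is hypothetical: the in-memory store, the ten minute lifetime, and the function names are assumptions, and actually sending the email is left out entirely.

```python
import hmac
import secrets
import time

CODE_TTL_SECONDS = 600  # assumed lifetime: 10 minutes

# Hypothetical in-memory store: email -> (code, expiry timestamp).
_pending = {}


def issue_code(email, now=None):
    """Create a 6-digit one-time code for this email; the caller would mail it."""
    now = time.time() if now is None else now
    code = f"{secrets.randbelow(10**6):06d}"  # cryptographically strong randomness
    _pending[email] = (code, now + CODE_TTL_SECONDS)
    return code


def redeem_code(email, submitted, now=None):
    """Return True for a correct, unexpired code. Any attempt consumes the code."""
    now = time.time() if now is None else now
    entry = _pending.pop(email, None)  # single use: removed even on a wrong guess
    if entry is None:
        return False
    code, expires_at = entry
    matches = hmac.compare_digest(code.encode("utf-8"), submitted.encode("utf-8"))
    return now <= expires_at and matches


code = issue_code("reader@example.com")
print(redeem_code("reader@example.com", code))  # first use succeeds
print(redeem_code("reader@example.com", code))  # second use is rejected
```

Discarding the code after any attempt means a wrong guess forces a fresh email, which limits brute-forcing a 6-digit space without needing any memory-hard hashing.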

Flask? Maybe?

Flask is a web framework for Python that clearly had some amount of Modesty™ in its design. It's not trying to do everything but rather be an alright starting point and let people solve the rest of it with extensions if they want. It comes with a templating library, a request life-cycle, routing, some logging stuff, and really not much else. It has about 17k lines to it so it's far from the worst. Strictly speaking I think I could likely not use this and go for something even more barebones, but it seems like a reasonable-enough starting point. Django is the "industry standard" Python web framework and is huge. ~400K lines to Flask's ~17K. Bottle is another one, quite like Flask to my understanding, and even smaller, with no dependencies. But it's fairly niche and doesn't seem the most actively developed. Quart is another Flask-like that is smaller than the original and also uses asyncio (more on that later) but it also sees very little development. I'll go with Flask for now, but moving to Bottle or Quart could make sense.

Gunicorn? Gevent? Eventlet? Stackless? Reverse proxies?

The Python ecosystem has a lot of web server options and even an entire web server interface standard called WSGI. I have been doing a lot of reading and comparing options. Flask comes with a development server, but it's not seriously tested as a production HTTP solution and so you can't complain if it has some obscure vulnerabilities and problems.
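If you've never seen WSGI, the whole interface is basically one callable. Here's a minimal sketch using only the standard library's `wsgiref`. It's not Flask and not Mosiki's code, just an illustration of what a WSGI server hands to an application; `app` and `call_app` are names I made up.

```python
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """A WSGI application: takes the request environ and a start_response
    callback, and returns an iterable of bytes for the body."""
    path = environ.get("PATH_INFO", "/")
    body = f"You asked for {path}\n".encode("utf-8")
    start_response(
        "200 OK",
        [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(body))),
        ],
    )
    return [body]


def call_app(path):
    """Invoke the app directly, the way a WSGI server would, without a socket."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the required WSGI keys
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers

    chunks = app(environ, start_response)
    return captured["status"], b"".join(chunks)


print(call_app("/wiki/home"))
```

Frameworks like Flask expose an object with this same calling convention, which is why you can swap the server underneath without touching the app.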

A common setup seems to be Nginx->Gunicorn->Flask. Nginx acts as a reverse-proxy: a server that will act as a bouncer. It's highly tested for security and will provide buffering and other features that can be used to improve stability. I can find benchmarks of it handling 10 thousand concurrent connections with 18 MB of RAM so it should be light enough for my case. I was tempted by lighttpd but I decided to go with the more known thing. Gunicorn provides a worker-model and some supervision but honestly, I probably will be single threaded and don't care about the worker model, so screw it, I won't use Gunicorn. I'm not hugely convinced it'll add much other than complexity.

Additionally, Python has a number of things to help with doing a crap tonne of requests. Particularly they make requests asynchronous via things like coroutines or green-threads, basically light-weight alternatives to threads that you can scale up into the thousands or even tens of thousands. There's a lot of options in this space, Stackless, Gevent, Eventlet, Asyncio. Asyncio is in the standard library and will likely be the popular one in the long term.
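To give a feel for why these light-weight alternatives scale, here's a small sketch using asyncio, picked only because it's in the standard library. The 50 ms sleep is a made-up stand-in for slow I/O and the function names are hypothetical.

```python
import asyncio
import time


async def handle_request(request_id):
    # Stand-in for slow I/O (disk, network). While this coroutine waits,
    # the event loop is free to run the others.
    await asyncio.sleep(0.05)
    return request_id


async def serve_many(count):
    # Start all the coroutines together and collect results in order.
    return await asyncio.gather(*(handle_request(i) for i in range(count)))


start = time.perf_counter()
results = asyncio.run(serve_many(1000))
elapsed = time.perf_counter() - start
print(f"{len(results)} simulated requests in {elapsed:.2f}s")
```

Run one after another, a thousand 50 ms waits would take 50 seconds; overlapped on a single thread they should finish in a small fraction of that, since nothing here is actually computing.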

Now, I don't really plan to have 1000s of users at any time for Mosiki, but I think it's good to try and be reliable. Additionally, I'm hoping these systems will help prevent stalls caused by slow I/O requests from one user impacting every other user. For now, I will try using Gevent because it's quite easy to add to a Flask program and Eventlet didn't want to work for whatever reason so I can't really compare.

Now, perhaps one of the technologies I have chosen to use is a bad bet. But the beauty of making a Modest Program is that you try to keep the code so simple that anyone with familiarity with the programming language can quite easily maintain it themselves. If I've made a poor choice for Mosiki, it should be easy to change later.

Testing time

So my Flask+Gevent server with a WIP Markdown page renderer pulling pages from SQLite consumes 35 MB at idle. Add nginx's 18 MB from that benchmark earlier, and let's assume Argon2 is using that 64 MB setting. That's 117 MB so far on the server stack, and over half of it is simply reserved for security reasons.

Alpine Linux is a Linux distribution designed to be tiny and secure. It's based around the impressive musl and busybox projects (I'll let you research those if you feel like it). At boot it uses 41 MB in a virtual machine, at least it does if you're in text only mode. If you're trying to go for an extremely cheap server, using such a distribution helps. For the sake of safety, let's double its memory usage to 82 MB for my equation. 82+117=199 MB of RAM. That does feel a little on the high side of what is likely hardware-optimal, but let's see how it scales under load simulation. I chose to use hey as my benchmark tool for no particular reason.
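Here's that memory budget written out as a quick Python tally, using only the figures quoted above. The 512 MB line at the end is the VPS size I said I was targeting.

```python
# Figures quoted in this post, in MB.
budget_mb = {
    "Flask + Gevent server (idle)": 35,
    "nginx (from the benchmark)": 18,
    "Argon2 (low-memory setting)": 64,
    "Alpine Linux (41 MB at boot, doubled)": 41 * 2,
}

total_mb = sum(budget_mb.values())
for name, mb in budget_mb.items():
    print(f"{name:40s} {mb:4d} MB")
print(f"{'Total':40s} {total_mb:4d} MB")
print(f"Share of a 512 MB VPS: {total_mb / 512:.0%}")
```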

20 Concurrent Users doing 2000 (total) simple page loads

Summary:

Total: 3.1373 secs

Slowest: 0.0536 secs

Fastest: 0.0118 secs

Average: 0.0312 secs

Requests/sec: 637.4907

Total data: 2452000 bytes

Size/request: 1226 bytes

Response time histogram:

0.012 [1] |

0.016 [1] |

0.020 [2] |

0.024 [2] |

0.029 [2] |

0.033 [1650] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

0.037 [246] |■■■■■■

0.041 [58] |■

0.045 [24] |■

0.049 [11] |

0.054 [3] |

Latency distribution:

10% in 0.0291 secs

25% in 0.0296 secs

50% in 0.0304 secs

75% in 0.0315 secs

90% in 0.0345 secs

95% in 0.0368 secs

99% in 0.0438 secs

Details (average, fastest, slowest):

DNS+dialup: 0.0000 secs, 0.0118 secs, 0.0536 secs

DNS-lookup: 0.0000 secs, 0.0000 secs, 0.0003 secs

req write: 0.0000 secs, 0.0000 secs, 0.0002 secs

resp wait: 0.0311 secs, 0.0115 secs, 0.0533 secs

resp read: 0.0000 secs, 0.0000 secs, 0.0003 secs

Status code distribution:

[200] 2000 responses

So, at 20 concurrent users, probably quite high for the intended use case, this simple page load is basically instant by internet standards. I saw no real perceptible memory increase. Now, let's try a more proper stress test.
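As a sanity check on those numbers, here's a tiny sketch that recomputes the throughput from the summary and compares it against what Little's law (concurrency divided by average latency) would predict. The inputs are copied from the hey output above; the variable names are mine.

```python
# Values copied from the 20-user hey summary above.
total_requests = 2000
total_seconds = 3.1373
concurrency = 20
avg_latency_seconds = 0.0312

# Throughput as hey reports it: completed requests over wall-clock time.
throughput = total_requests / total_seconds

# Little's law: with N requests always in flight and mean latency W,
# the sustained rate is about N / W.
littles_law_estimate = concurrency / avg_latency_seconds

print(f"measured:  {throughput:.1f} req/s")
print(f"predicted: {littles_law_estimate:.1f} req/s")
```

The two figures land within about one percent of each other, which suggests the server was kept saturated at 20 in-flight requests for the whole run.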

1000 Concurrent Users doing 2000 (total) simple page loads

Summary:

Total: 7.4600 secs

Slowest: 7.3279 secs

Fastest: 0.0631 secs

Average: 1.3052 secs

Requests/sec: 268.0980

Total data: 2452000 bytes

Size/request: 1226 bytes

Response time histogram:

0.063 [1] |

0.790 [1016] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

1.516 [303] |■■■■■■■■■■■■

2.243 [310] |■■■■■■■■■■■■

2.969 [41] |■■

3.695 [271] |■■■■■■■■■■■

4.422 [41] |■■

5.148 [0] |

5.875 [0] |

6.601 [0] |

7.328 [17] |■

Latency distribution:

10% in 0.2357 secs

25% in 0.3473 secs

50% in 0.5573 secs

75% in 1.9608 secs

90% in 3.3497 secs

95% in 3.5311 secs

99% in 3.7537 secs

Details (average, fastest, slowest):

DNS+dialup: 0.0201 secs, 0.0631 secs, 7.3279 secs

DNS-lookup: 0.0028 secs, 0.0000 secs, 0.0582 secs

req write: 0.0021 secs, 0.0000 secs, 0.0471 secs

resp wait: 1.2688 secs, 0.0110 secs, 7.3278 secs

resp read: 0.0001 secs, 0.0000 secs, 0.0025 secs

Status code distribution:

[200] 2000 responses

Obviously the average load speed here gets a bit bad, but it handled it, didn't max out a CPU core, and most importantly, memory usage didn't go nuts. Nginx seemed to end up only using about 15 MB, and my Flask+Gevent server got to 36 MB, 1 MB greater than idle. Setting aside some memory for Argon2 and my Alpine ballpark with its extra space, that's 197 MB of RAM for what is likely a very high load scenario for what I'm making. Scaling well so far then. That 1 MB increase conveniently is around about the number of concurrent users times the payload size; I suspect that is not an accident. If I keep my page sizes small, via tightly curated CSS, no JS, and sensible image formats such as PNG, then this all should scale quite well.
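The back-of-envelope maths behind that suspicion, using the concurrency and the "Size/request" figure from the hey output. This only checks that the arithmetic lines up; it doesn't prove that's where the memory went.

```python
concurrent_users = 1000    # hey concurrency for the stress test
bytes_per_response = 1226  # "Size/request" from the hey output

# If every in-flight request held one full response in memory at once:
in_flight_bytes = concurrent_users * bytes_per_response
in_flight_mib = in_flight_bytes / 1024**2

print(f"{in_flight_bytes} bytes is about {in_flight_mib:.2f} MiB")
```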

Weirdly, the peak memory usage according to grep ^VmPeak /proc/pid/status of the Flask+Gevent server was around 215 MB, but it seemingly hits that peak immediately. I should note that VmPeak counts peak virtual address space rather than resident RAM (VmHWM is the peak resident figure), so it may overstate real usage. I know Gevent does monkey patching on the standard library to work, and maybe that causes an initial memory surge, which sucks, but it's probably manageable. The server is possibly still usable on a 256 MB RAM VPS with some swap?
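If you'd rather pull these numbers from Python than grep, here's a small sketch that parses the same status file. It reads VmPeak (peak virtual size) alongside VmHWM and VmRSS (peak and current resident memory), since the resident figures are closer to actual RAM in use. The helper names are made up, and the sample text is invented for illustration rather than measured from Mosiki.

```python
def parse_status_kb(status_text, fields=("VmPeak", "VmHWM", "VmRSS")):
    """Extract selected memory fields (in kB) from /proc/<pid>/status text."""
    found = {}
    for line in status_text.splitlines():
        name, _, rest = line.partition(":")
        if name in fields:
            found[name] = int(rest.split()[0])  # value looks like "  220160 kB"
    return found


def read_own_memory():
    """Linux only: read this process's own figures from /proc/self/status."""
    with open("/proc/self/status") as f:
        return parse_status_kb(f.read())


# Made-up sample, for illustration only (not measured from Mosiki).
sample = (
    "Name:\tpython3\n"
    "VmPeak:\t  220160 kB\n"
    "VmHWM:\t   40000 kB\n"
    "VmRSS:\t   36864 kB\n"
)
print(parse_status_kb(sample))
```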

Point is though, memory usage seems low and so far the Python bit is only 36MB. You can only really improve the RAM substantially by using an even tinier OS, or compromising on password security. All of the Modesty so far has been about careful choices in what I'll build on; trying to add things that seem like good bang-for-buck and avoid things that seem like too much for my problem. I did a lot of looking around online for benchmarks where possible. So far, I think it's working out quite alright.

Goodbye for now

My work on Mosiki is nowhere near done; it's basically a simple markdown-from-sqlite renderer at the moment. And as I flesh it out more I'll be able to make tests with more representative pages. In theory you could benchmark an edit workload, but honestly I don't think that's likely very relevant to my use case? I'll probably do it anyway for completeness' sake.

In the next post in this series I'll show off where Mosiki is at feature-wise, and go over some random engineering decisions I made and some actual code. It'll probably be a post less relevant to the performance goals of Mosiki.

Thanks again for reading. If you have any comments, questions, or suggestions feel free to reach out.